Takeaways from The Markup's investigation into Wisconsin's racially inequitable dropout algorithm

Wisconsin’s Dropout Early Warning System scores every middle schooler based on income, race, and more.

May 22, 2023

(Credit: Photo illustration by Gabriel Hongsdusit, photos by Rodney Johnson for The Markup)

Early warning systems that use machine learning to predict student outcomes (such as dropping out, scoring well on the ACT, or attending college) are common in K-12 and higher education. Wisconsin public schools use an algorithmic model called the Dropout Early Warning System (DEWS) to predict how likely middle school students are to graduate from high school on time. Twice a year, schools receive a list of their enrolled students with DEWS’ color-coded prediction next to each name: green for low risk, yellow for moderate risk, or red for high risk of dropping out.

But what many people, including school administrators and teachers, don’t know is that the algorithm uses factors outside of a student’s control, like race and free or reduced-price lunch status, to generate these predictions. Our investigation found that after a decade of use and millions of predictions, DEWS may be negatively influencing how educators perceive students of color. And the state has known since 2021 that the predictions aren’t fair.

Three key findings

- DEWS is wrong nearly three quarters of the time when it predicts a student won’t graduate on time, and it’s wrong at significantly greater rates for Black and Hispanic students than it is for White students.

- The system labels students low, moderate, or high risk. Principals, superintendents, and other educators who use DEWS told us they received little training on how those predictions are generated. Students told us the high risk labels are stigmatizing and discouraging.

- A forthcoming academic study from researchers at the University of California, Berkeley, who shared data and prepublication findings with The Markup, found that DEWS has had no effect on graduation rates for students it labels high risk. But if schools used it to prioritize certain students, it could lead to students of color being “systemically overlooked and de-prioritized.”

The background: Wisconsin’s racial gaps

- Wisconsin’s public school system has some of the country’s worst racial gaps. Last year, 94 percent of White students graduated on time, compared to 82 percent of Hispanic and 71 percent of Black students. The gap between Black and White students’ reading and math test scores has been the widest of any state in the nation for more than a decade.

- State officials created DEWS to help close those gaps. They hoped that by predicting which students would drop out by as early as sixth grade, educators could intervene in time to keep kids on course to graduate.

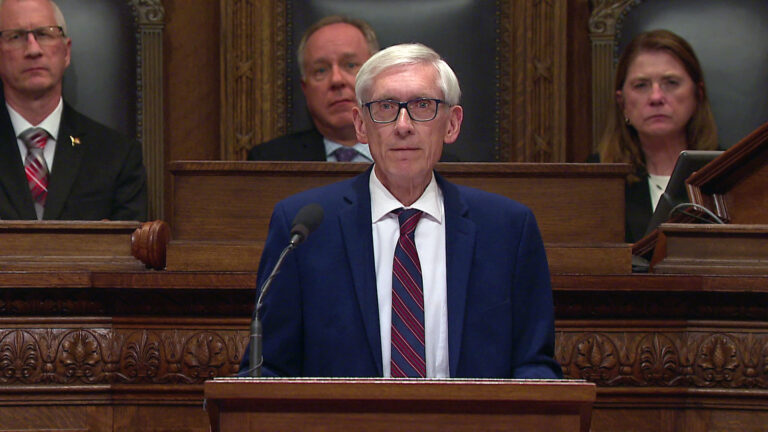

- As part of its biennial budget proposal in 2011, Wisconsin’s Department of Public Instruction (DPI), then led by Tony Evers, who is now the state’s governor, requested $20 million for an “Every Child a Graduate” grant program that would send resources directly to struggling districts. But then-governor Scott Walker had a different plan for public education. He cut nearly $800 million, about 7 percent, in state funding for public schools from the two-year budget, including the “Every Child a Graduate” money. So, DPI looked for a high-tech solution to its graduation gap. In 2012, it began piloting DEWS.

What Wisconsin students and administrators told us

Administrators aren’t trained on how to interpret DEWS

- “They just handed us the data and said, ‘Figure it out,’” said Sara Croney, the superintendent of Maple School District.

- In the city of Racine, middle schools once used DEWS to select which students would be placed in a special “Violence Free Zone” program, which included sending disruptive students to a separate classroom.

- DPI’s DEWS Action Guide does not mention that student race, gender, and free or reduced-price lunch status are key input variables for the algorithms.

- In response to The Markup’s questions about DEWS, staff from the School District of Cudahy originally wrote that they hadn’t known about and didn’t currently use the predictions but that counselors were “excited about this resource.” However, after reviewing our findings, the district superintendent, Tina Owen-Moore, wrote, “That certainly changes my perspective!!”

Students we interviewed were surprised to learn DEWS existed and were concerned that an algorithm was using their race to label them and predict their future

- “It makes the students of color feel like they’re separated … like they automatically have less,” said Christopher Lyons, a Black student who graduated from Bradford High School, in Kenosha, in 2022.

- Kennise Perry, 21, attended Milwaukee Public Schools, which are 49 percent Black, before moving to the suburb of Waukesha, where the schools are only 6 percent Black. She told The Markup her childhood was difficult, her home life sometimes unstable, and her schools likely considered her a “high risk” student.

- “But I feel that the difference between people who make it and people who don’t are the people you have around you, like I had people who cared about me and gave me a second chance and stuff,” Perry said. “[DEWS] listing these kids ‘high risk’ and their statistics, you’re not even giving them a chance, you’re already labeling them.”

Wisconsin DPI spokesperson Abigail Swetz declined to answer questions about DEWS but provided a brief emailed statement

“Is DEWS racist?” Swetz wrote. “No, the data analysis isn’t racist. It’s math that reflects our systems. The reality is that we live in a white supremacist society, and the education system is systemically racist. That is why the DPI needs tools like DEWS and is why we are committed to educational equity.”

In response to our findings and further questions, she added, “You have a fundamental misunderstanding of how this system works. We stand by our previous response.” She did not explain what that fundamental misunderstanding was.

How does DEWS work?

DEWS is an ensemble of machine learning algorithms that are trained on years of data about Wisconsin students — such as their test scores, attendance, disciplinary history, free or reduced-price lunch status, and race — to calculate the probability that each sixth through ninth grader in the state will graduate from high school on time. If DEWS predicts that a student has less than a 78.5 percent chance of graduating (including margin of error), it labels that student high risk.
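To make the labeling rule concrete, here is a minimal Python sketch of how a predicted graduation probability could be turned into a risk label. Only the 78.5 percent high-risk cutoff comes from the description above. The function name `dews_style_label`, the `margin` parameter, and the rule for splitting moderate from low risk are assumptions made for illustration; this is not DPI's actual code.

```python
# Illustrative sketch only. The 78.5 percent cutoff is from the article;
# everything else here (names, margin handling, moderate/low split) is assumed.
HIGH_RISK_THRESHOLD = 0.785


def dews_style_label(prob_graduate: float, margin: float = 0.0) -> str:
    """Map a predicted on-time graduation probability to a risk label.

    prob_graduate: predicted probability of graduating on time (0 to 1).
    margin: hypothetical margin of error around that prediction.
    """
    if not 0.0 <= prob_graduate <= 1.0:
        raise ValueError("prob_graduate must be between 0 and 1")
    if margin < 0.0:
        raise ValueError("margin must be non-negative")

    lower = max(0.0, prob_graduate - margin)
    upper = min(1.0, prob_graduate + margin)

    # High risk: even the optimistic end of the range is below the cutoff.
    if upper < HIGH_RISK_THRESHOLD:
        return "high"
    # Assumed rule: low risk when the whole range sits at or above the cutoff.
    if lower >= HIGH_RISK_THRESHOLD:
        return "low"
    # Assumed rule: the range straddles the cutoff.
    return "moderate"


if __name__ == "__main__":
    for prob, margin in [(0.60, 0.05), (0.77, 0.05), (0.95, 0.02)]:
        print(prob, margin, dews_style_label(prob, margin))
```

Under these assumptions, a student whose predicted range straddles the cutoff is labeled moderate risk rather than high risk, which shows why the size of the margin of error matters as much as the prediction itself.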

How we reported this

To piece together how DEWS has affected the students it’s judged, The Markup examined unpublished research from the Wisconsin Department of Public Instruction, analyzed 10 years of district-level DEWS data, interviewed students and school officials, and informally surveyed and collected responses from 80 of the state’s more than 400 districts about their use of the predictions.

Our investigation shows that many Wisconsin districts use DEWS — 38 percent of those that responded to our survey — and that the algorithms’ technical failings have been compounded by a lack of training for educators. Fellow journalists, if you want to know how you can cover this subject in your area, subscribe to our newsletter so you’ll be the first to know when we release our story recipe for this investigation in the coming weeks.

This article was originally published on The Markup and was republished under the Creative Commons Attribution-NonCommercial-NoDerivatives license.
