AI, media manipulation and political campaign lies in 2024

Political advertising on television and social media is familiar to Wisconsinites in election years, but voters in 2024 are also contending with artificial intelligence and rampant misinformation.

By Zac Schultz | Here & Now

September 26, 2024

Political groups are projected to spend $423 million on campaign ads in Wisconsin in 2024, with $60 million in ads for the presidential race alone reserved for airtime in the final six weeks before Election Day.

Wisconsinites may be used to attack ads stretching the truth or twisting facts, but a new concern comes with the growing use of artificial intelligence, or AI, to create an entirely new false reality.

Watch just about any local newscast in Wisconsin — when the station cuts to break, many of the commercials will be campaign ads.

“Political will occupy a large percentage of our available inventory between now and the election and have for many months,” said Steve Lavin, general manager of WBAY in Green Bay.

In a purple state, campaign ads are the best way to reach the last few voters who haven’t already made up their minds.

- A still image from a 2024 Trump campaign ad is seen, in an election cycle where political groups are projected to spend $423 million on ads in Wisconsin. (Credit: PBS Wisconsin)

- A still image from a 2024 Harris campaign ad is seen, in an election cycle where political groups are projected to spend $423 million on ads in Wisconsin. (Credit: PBS Wisconsin)

“Well, let’s be honest. The majority of the public has already decided the way that they’re going to vote. And these ads are just over that little fringe — 5 to 7% of undecideds — really undecideds in the middle,” Lavin said.

Most of the controversies over campaign ads come when one side demands their opponent retract an ad due to inaccuracies or technicalities. Lavin said TV stations have lawyers to deal with those issues.

“I’ll never pull an ad myself because, number one, I would rather the other side, if there’s falsehoods in an ad, the other side has every bit a right to actually answer those by buying more ads — right,” he said.

A new concern over ads has to do with the growing use of AI to generate images that look real.

Earlier in 2024, the Wisconsin Legislature passed a bill that requires any campaign ad using AI to include that information. It was signed into law by Gov. Tony Evers.

“Any time they’re using any type of AI, they’re supposed to disclose it,” Lavin said.

Steve Lavin, general manager of WBAY-TV, discusses the kind of advertising television stations are airing during an election year on Sept. 10, 2024, in Green Bay. “Political will occupy a large percentage of our available inventory between now and the election and have for many months,” Lavin said. (Credit: PBS Wisconsin)

Not disclosing comes with a $1,000 penalty, but that penalty would be paid by the group running the ad.

An amendment to the bill made sure broadcasters are not liable if AI is used and not disclosed.

“There is a bit of responsibility on the side of the broadcasters, but it’s also extremely hard to police,” said Michael Wagner, a professor of journalism at the University of Wisconsin-Madison, who has studied the use of AI in political speech.

“How can they know for sure the video was AI generated? How can they know for sure the script was AI generated?” Wagner asked.

Lavin said so far there’s no evidence of any AI in campaign ads.

“Since that law went into place, I have not seen or heard an ad that has used that disclaimer yet. So either it’s if it’s being used, nobody’s disclosing it, or they’ve determined that the penalties are so high that they’re just not going to use AI to determine it,” he said.

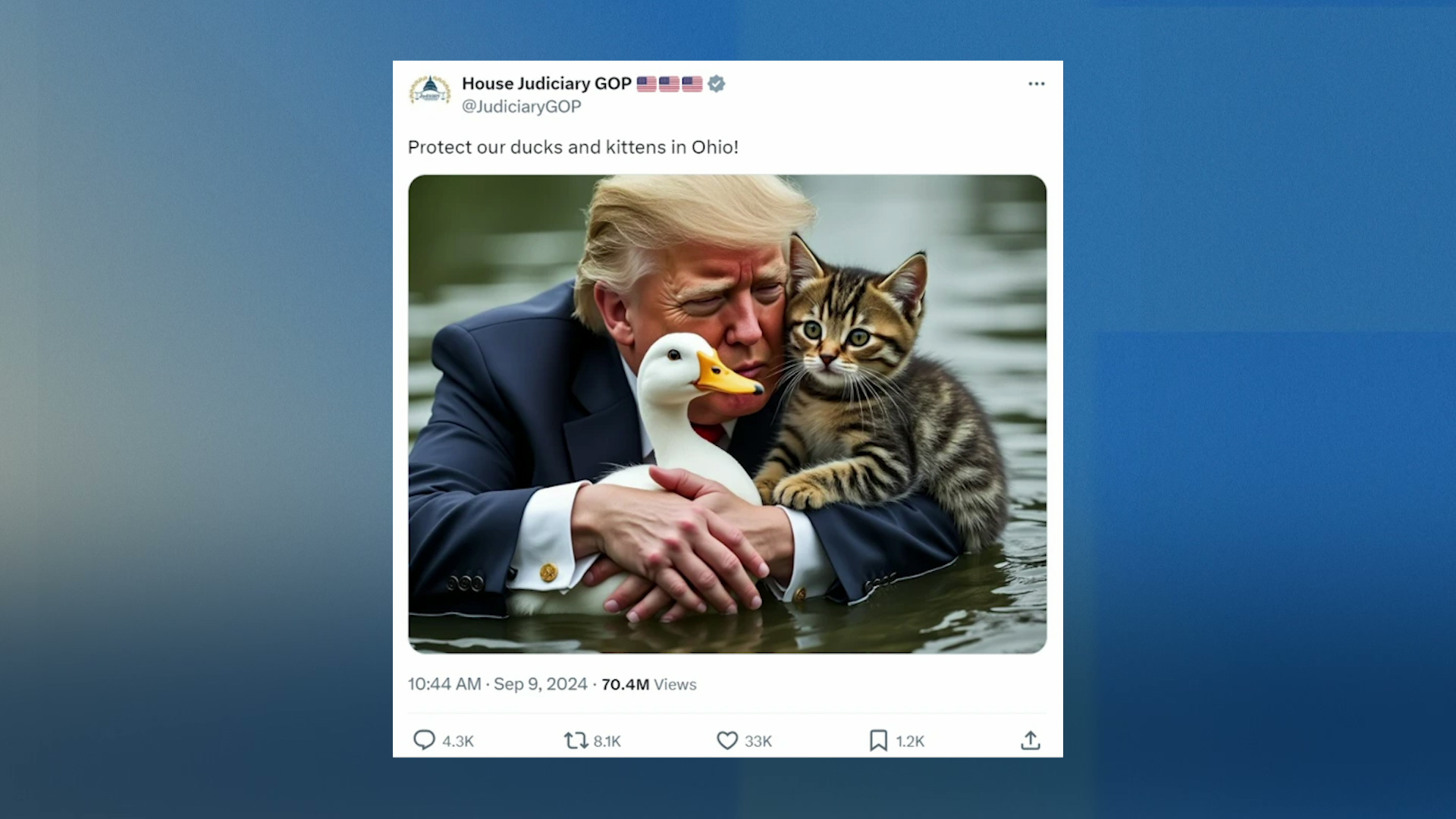

“I think the real danger with AI in this election is not in campaign advertisements. It’s in social media posts that go viral,” said Wagner.

Voters have already seen examples of this.

- A screenshot of a post to the social media platform X by the House Judiciary Committee Republicans is one example of how artificial intelligence-generated images are being used in the 2024 election cycle. (Credit: PBS Wisconsin)

- A screenshot of a post to the social media platform X by the company’s owner Elon Musk is one example of how artificial intelligence-generated images are being used in the 2024 election cycle. (Credit: PBS Wisconsin)

“Using AI as a boogeyman — so something happens, the other side says, ‘Oh, that must be AI, it can’t possibly be real,'” Wagner noted.

In August, Vice President Kamala Harris held a rally at an airport hangar in Detroit.

Former President Donald Trump falsely claimed photos of the Michigan event used AI to make the crowd look bigger.

“I spoke at that rally. I spoke to all 15,000 of those people. They are real,” said Garlin Gilchrist, Michigan’s lieutenant governor.

“Donald Trump was so insecure about that crowd size that he had to find a way to try to delegitimize it,” Gilchrist said. “And so by playing on the fears of people and saying it was artificial intelligence, I think that’s all he knows how to do, is play on people’s fears.”

On Aug. 20, 2024, in Chicago during the Democratic National Convention, Michigan Lt. Gov. Garlin Gilchrist discusses a rally held by Vice President Kamala Harris in Michigan. Former President Donald Trump falsely claimed photos of the event used AI. “I spoke at that rally. I spoke to all 15,000 of those people. They are real,” Gilchrist said. (Credit: PBS Wisconsin)

Wagner said beyond making AI a boogeyman, there is another way artificial intelligence can be abused.

“The other is that a candidate picks up on a post that uses AI and treats it as true, which has also happened, where former President Trump shared information that Taylor Swift had endorsed him, which she had not,” said Wagner, referencing a post Trump made on his social network.

Neither AI incident seems to have affected the race for president.

Swift endorsed Harris, who has proven her large crowds are real.

“What a crowd! You know, Donald Trump says Democrats can only have large crowds because of AI,” said U.S. Rep. Mark Pocan, D-Town of Vermont, at a Harris rally on Sept. 20 in Madison.

AI wasn’t even involved in the biggest lie of the campaign.

When Trump falsely claimed Haitian immigrants were stealing pets and eating them in Springfield, Ohio, the source of the misinformation was a Facebook post — no AI involved at all.

“So, when these kinds of things happen to — especially when the candidates themselves pick it up and share it — those things are going to take on a life of their own in really remarkable and fast ways that are hard to regulate,” Wagner said.

Michael Wagner, a journalism professor at the University of Wisconsin-Madison, discusses the implications of how AI can be used in politics on Sept. 4, 2024, in Madison. “There is a bit of responsibility on the side of the broadcasters, but it’s also extremely hard to police,” Wagner said. (Credit: PBS Wisconsin)

The targets for misinformation are the same as the audience for campaign ads.

“Low information voters who are paying attention at the last minute are often susceptible to messages because they are new to them. They haven’t been paying attention to the race, and these things are new,” said Wagner.

The solution to misinformation, whether AI generated or not, is the same as it’s always been.

“There is so much disinformation out there — whether it’s in social media, it could be in these campaigns, could be spread, spreading disinformation,” Lavin said. “I think it’s up to the individual voter to determine what’s actually the truth.”
